The Latest Episode

Of all the talk of artificial intelligence on social media this week, one instance has stuck in my mind.

The topic was getting AI to persuasively explain changes marked in a draft you're sending to the counterparty. In this case, the change was simple enough: extending from two years to four years the period during which confidential information is protected under a confidentiality agreement.

The post included the 72-word prompt used by the person posting. It seemed entirely sensible to me, but like all prompts, it had the slightly surreal quality of someone earnestly chanting something while rubbing a magic lamp.

The post also contained the explanation the AI spat out:

Extended Duration Justification: The adjustment from a two-year to a four-year expiration term for the MNDA is proposed to ensure a more stable framework for our ongoing collaboration. Given the complexity and potential long-term nature of our joint projects, a longer confidentiality period is prudent to protect both parties' interests, intellectual property, and the integrity of our shared endeavours. This extension also reflects our commitment to a sustained partnership and provides ample time for the full realization and commercialization of our collaborative efforts. We believe this amendment supports a mutual interest in fostering a secure, trusting environment conducive to innovation and success.

It sounds like something recited by a filibustering politician at hearings over a municipality's public-works budget. Behold the abstract nouns (a reliable sign of stultifying prose): duration, justification, adjustment, expiration, framework, collaboration, complexity, period, integrity, endeavours, extension, commitment, partnership, realization, commercialization, efforts, interest, environment, innovation, success. It's bewilderingly bad.

Here's what I would have said: "Some of our confidential information is particularly sensitive, so we'd like it to be protected for four years, not two years. Since it's a mutual NDA, you'd get the benefit of that extra protection too." The End.

No doubt AI true believers will say the poster's 72 words weren't good enough, that it's all in how you prompt. In other words, it's all in how you rub the magic lamp. What level of cajoling is required so the AI doesn't deliver something bad?

The Broader Context

For a while now, I've sporadically had a look at how AI is applied to contracts. The elephant in the room is that if you train AI on the systemic dysfunction of mainstream contract drafting, the AI will serve up that dysfunction.

In this blog post I consider Allen & Overy's new AI contract-drafting tool, and their reassurance that all will be well if you train the AI on your "gold-standard precedents." That led me to observe that "gold-standard precedents are as rare as albino alligators."

Getting more specific, in this blog post I look at erratic wording in an AI-generated redline. And in this blog post, I consider the notion of using AI to summarize contracts, and I conclude that we shouldn't be summarizing contracts at all. Instead, we should be outlining them, and outlining requires too much judgment to cede that task to AI.

I leave to others the question of how best to use ChatGPT and the like. I'm willing to believe it can be applied to low-level, repetitive, predictable tasks. If you can replace 95% of your help-desk staff with a chatbot, then go for it, I suppose. But everything I've seen about applying AI to contracts is deeply unpromising.

Part of the problem is that although nothing about using AI requires us to be subservient to it, subservience has been an undercurrent in much of the discussion I encounter. We're left rubbing the magic lamp.

The New Boss, The Old Boss

The new boss (AI) shares some characteristics with the dysfunctional old boss—the copy-and-paste system.

By turning contract language into something to be copied instead of something to be understood, the copy-and-paste system has wrought slow-motion chaos. And faced with chaos, the legal establishment generally offers post-hoc rationalizations, in the form of addled conventional wisdom. My self-appointed task has been to dismantle the conventional wisdom. The notion of a hierarchy of efforts provisions is bogus? Check. The phrase represents and warrants is pointless and confusing? Check. The "successors and assigns" provision is useless? Check. I could go on and on.

We've had no alternative to copy-and-pasting, so the chaos was inevitable. Our failing lies in ignoring the chaos. By contrast, the current AI frenzy is entirely self-inflicted.

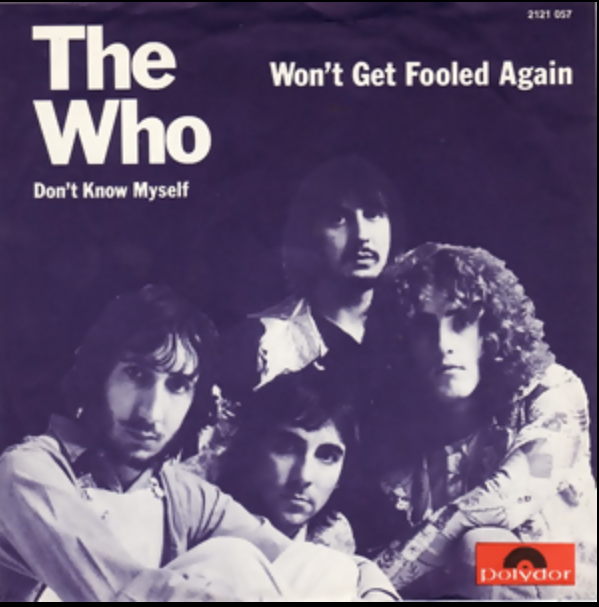

But what the AI frenzy shares with the copy-and-paste system is that both have resulted in our turning a blind eye to what's required for an effective contract process. I'm preoccupied with one part of that—fixing contract drafting. To do that, we need to turn into a commodity task the process of coming up with language to express deal points. And we need to rethink how we teach contract drafting. Compared with the collective cost of the ongoing futility, it would be a simple matter to take meaningful remedial steps. So we don't get fooled again.
